LogReduce Values

The LogReduce Values operator allows you to quickly explore structured logs by known keys. Structured logs can be in JSON, CSV, key-value, or any structured format. Unlike the LogReduce Keys operator, you need to specify the keys you want to explore. The values of each specified key are parsed and aggregated for you to explore.

This operator does not automatically parse your logs. You need to parse the keys you want to explore prior to specifying them in the LogReduce Values operation.

The following table shows the fields that are returned in results.

_cluster_id | _signature | _count |

|---|---|---|

| The unique hash value assigned to the signature. | The group of specified key-value pairs with their count and percentage of occurrence in relation to the group _count. | The number of logs that have the related signature. |

The field is a clickable link that opens a new window with a query that drills down to the logs from the related schema.

With the provided results you can:

- Click the provided links to drill down and further explore logs from each schema.

- Compare results against a previous time range with LogCompare.

- Run subsequent searches.

Syntax

| logreduce values on\<key\>[,\<key\>, ...] [output_threshold\<output_threshol\>]

| _cluster_id | _signature | _count |

|---|---|---|

| The unique hash value assigned to the signature. | The group of specified key-value pairs with their count and percentage of occurrence in relation to the group _count. | The number of logs that have the related signature. The field is a clickable link that opens a new window with a query that drills down to the logs from the related schema. |

Details option

The details option allows you to investigate and manipulate logs from specific data clusters generated by a previously run LogReduce Values search.

There are two methods you have to use the details option:

Click on the

_countfield value from the LogReduce Values search results.

A new search is created with the necessary identifiers from your initial LogReduce Values search. The search contains all of the raw logs from the selected data cluster.

Manually provide the necessary identifiers. You can provide identifiers from previously run LogReduce Values searches. However, the only way to get the search identifier, given with the

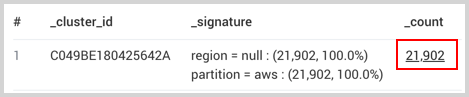

shortcodeIDparameter, is to click the_countlink from the interface. The query of the created search has the identifier that you can save for later use, up to 1,095 days. For example, the following query was created by clicking the_countlink:| logreduce values details on %"region", %"partition" pCV6qgaOvASgi1j9KhcGaE6mts6jfOEk "C049BE180425642A"The

pCV6qgaOvASgi1j9KhcGaE6mts6jfOEkvalue is theshortcodeID. WhileC049BE180425642Ais acluster_id.

Details Syntax

| logreduce values details on %\<key\>"[, %\<key\>", ...] \<shortcodeI\> \<cluster_id\>,\<cluster_id\>, ...]

| Parameter | Description | Default |

|---|---|---|

| keys | Comma separated list of keys that were parsed from structured data. | Null |

| cluster_id | The signature identifier that was provided from your initial LogReduce Values search. | Null |

| shortcodeID | The unique hash value assigned to the initial LogReduce Values search. | Null |

For example, to see details from a particular LogReduce Values search and data clusters, you'd use the following syntax:

| logreduce values details on %\<key\>"[, %\<key\>", ...] \<shortcodeI\> \<cluster_id\>,\<cluster_id\>, ...]

To see all the logs by cluster identifiers for further processing, you'd use the following syntax:

| logreduce values details on %\<key\>"[, %\<key\>", ...]\<shortcodeI\>

Limitations

- Not supported with Real Time alerts.

- Time Compare and the compare operator are not supported against LogReduce Values results.

- If you reach the memory limit you can try to shorten the time range or the number of specified fields. When the memory limit is reached you will get partial results on a subset of your data.

- Response fields

_cluster_id,_signature, and_countare not supported with Dashboard filters.

_count link

- Searches opened by clicking the link provided in the

_countresponse field:- are run against message time.

- can return different results due to variations in your data.

- When provided in a Scheduled Search alert, the link from the

_countresponse field is invalid and will not work.

Examples

AWS CloudTrail

In this example, the user wants to cluster AWS CloudTrail logs to understand errorCodes, like "AccessDenied", eventSource, like Ec2 or S3, and eventName. This can reveal patterns such as certain eventSources contributing more errors than others.

_sourceCategory= *cloudtrail* errorCode

| json field=_raw "eventSource" as eventSource

| json field=_raw "eventName" as eventName

| json field=_raw "errorCode" as errorCode

| logreduce values on eventSource, eventName, errorCode

Kubernetes

After using LogReduce Keys to scan your logs for schemas you can use LogReduce Values to explore them further based on specific keys.

_sourceCategory="primary-eks/events"

| where _raw contains "forge"

| json auto "object.reason", "object.involvedObject.name", "object.message", "object.involvedobject.kind", "object.source.component", "object.metadata.namespace" as reason, objectName, message, kind, component, namespace

| logreduce values on reason, objectName, message, kind, component, namespace

Next, use LogExplain to determine how frequently your reason is FailedScheduling.

AWS CloudTrail

The following query helps you see which groups of users, IP addresses, AWS regions, and S3 event names are prevalent in your AWS CloudTrail logs that reference AccessDenied errors for AWS. Knowing these clusters can help plan remediation efforts.

_sourceCategory=*cloudtrail* *AccessDenied*

| json field=_raw "userIdentity.userName" as userName nodrop

| json field=_raw "userIdentity.sessionContext.sessionIssuer.userName" as userName_role nodrop

| if (isNull(userName), if(!isNull(userName_role),userName_role, "Null_UserName"), userName) as userName

| json field=_raw "eventSource" as eventSource

| json field=_raw "eventName" as eventName

| json field=_raw "awsRegion" as awsRegion

| json field=_raw "errorCode" as errorCode nodrop

| json field=_raw "errorMessage" as errorMessage nodrop

| json field=_raw "sourceIPAddress" as sourceIp nodrop

| json field=_raw "recipientAccountId" as accountId

| json field=_raw "requestParameters.bucketName" as bucketName nodrop

| where errorCode matches "*AccessDenied*" and eventSource matches "s3.amazonaws.com" and accountId matches "*"

| logreduce values on eventName, userName, sourceIp, awsRegion, bucketName

| sort _count desc

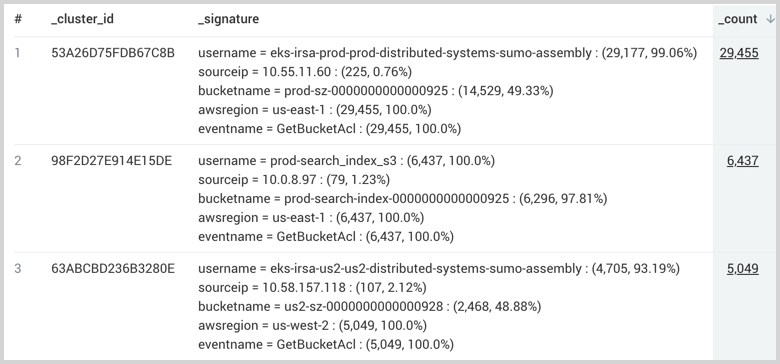

Results show each unique signature:

Next, use LogExplain to analyze which users, IP addresses, AWS regions, and S3 event names most explain the S3 Access Denied error based on their prevalence in AWS CloudTrail logs that contain S3 Access Denied errors versus logs that don't contain these errors.

Drill down

If the user wants to drill down on a particular logreduce values query id and cluster id, we provide a link that leads to the following query.

_sourceCategory="aws/cloudtrail/production" and _collector="AWS"

| json "eventName", "eventSource", "awsRegion", "userAgent", "userIdentity.type", "managementEvent", "readOnly"

| logreduce values-details\<shortcodeI\> [clusterId\<cluster i\>]

If the user wants to label the raw logs by their cluster ids for further processing, they can use the following query:

_sourceCategory="aws/cloudtrail/production" and _collector="AWS"

| json "eventName", "eventSource", "awsRegion", "userAgent", "userIdentity.type", "managementEvent", "readOnly"

| logreduce values-details\<shortcodeI\>

| ...

Parameters to the logreduce values-details operator:

shortcode_id, required: this is used to link to the serialized histograms and fetches them. Once fetched, they are used to recluster the logs in the same query and match them to their respective clusterscluster_label, optional: The cluster label is passed as a query parameter to the logreduce values-details operator which can take either a list of labels as a parameter where it only matches the logs against the histograms of those labels or no labels where we return all logs with a new field which is the cluster label, on which we can run the future logexplain operator downstream. Only for drilling down, we would pass the cluster label to this operator which would return non-agg output of all logs with that cluster label.

Drill down output:

Non-agg output, with the same schema as the input format, but added an extra “cluster” column which denotes cluster membership.